Executive Summary

Navigating the AI Cycle in a Post-DeepSeek World

Macro Perspectives: Such Great Heights

Geopolitics: Won’t Get Fooled Again

Market Analysis & Outlook: The Circle Game

Conclusions & Positioning: Seven Nation Army

Navigating the AI Cycle in a Post-DeepSeek World

On Sunday, January 26th, news stories began to surface suggesting that China had entered the Gen-AI race in a big way. An AI startup, known as DeepSeek, developed its own version of a ChatGPT-like chatbot called the R1. Its open source code makes it freely available for anyone to use and examine. Since its release nearly a month ago, the initial reviews of the technology have been overwhelmingly positive. DeepSeek was founded in China by Liang Wenfeng, co-founder of the quantitative hedge fund High-Flyer, and is wholly owned by the fund. On Monday, January 27th, investors learned the hard way that DeepSeek’s R1 had dethroned OpenAI’s ChatGPT as the most downloaded free app in the U.S. on Apple’s App Store. In response, the Nasdaq closed the day down 3%, led by a 17% plunge in Nvidia’s shares.

Importantly, DeepSeek’s R1 was built despite the U.S. curbing chip exports to China three times in the last three years. Estimates vary as to what DeepSeek spent to develop its R1 platform, or how many GPUs went into it, but reports agree that the R1 has a training cost of approximately $5.6 million per run. Regardless of the specific numbers, the consensus view is that the R1 was developed at a fraction of the cost of rival models by OpenAI, Anthropic, Google, Meta, and others. How was this possible, you ask? Necessity is the mother of invention.

The U.S. approach to developing Gen-AI has been one of brute force, driven by spending hundreds of billions of dollars on high performance hardware and power infrastructure. This strategy was perceived to have created a competitive moat around the so-called hyperscalers (primarily MSFT, AMZN, GOOG, ORCL, and META) — establishing a virtual oligopoly. The narrative was simple: you had to be able to pay tens, if not hundreds of billions of dollars for infrastructure in order to play. But for Chinese AI startups such as DeepSeek, the pay-to-play model was simply not an option — whether they could afford it or not. As such, they were forced to approach the problem from a different angle. Their approach was to use clever software instead of powerful hardware. It appears to have worked, leading Wall Street to rethink the economics of Gen-AI.

The use of software to achieve results only previously achieved by using billions of dollars worth of high-performance hardware is a game changer. It’s the classic disruptive event. The old AI value chain is no longer sacrosanct. It also opens the door to myriad sources of competition, as well as an increased potential for malfeasance. Case in point, the terms of use for downloading DeepSeek’s platform are akin to inviting a vampire into your home. Heretofore, the consensus has viewed the future of Gen-AI only through the lens of its beneficence. Yet, we now see a strong potential for this attitude to shift as foreign competition proliferates, and the path of the righteous man is beset on all sides by the inequities of the selfish and the tyranny of evil men.

This all leaves investors (not to mention leveraged speculators) in a bit of a conundrum. As of now, they no longer know where they are in the AI cycle. They are, in a sense, lost at sea. Our title, “Dead Reckoning,” is a nautical term that refers to calculating one’s position based upon one’s last known location, speed and direction: essentially navigating without precise instruments, and relying solely upon judgement and intuition. This is the new reality for mega-cap growth/AI investors for the foreseeable future. During the sixteenth, seventeenth, and eighteenth centuries, a naval or merchant ship’s captain heading from a European port to the West Indies would set his compass due west along a specific latitude and simply sail until he saw land. Once he found the coastline, he could adjust his course to the north or south in order to reach a specific destination based upon the latitudes illustrated on his charts.

For a ship’s captain, calculating his latitude while at sea was fairly straightforward. Using an instrument known as an astrolabe, he would simply measure the angle between the sea’s horizon and the sun at midday, or the North Star at night. The height of the celestial body above the horizon allowed him to determine his position north or south of the equator with a high degree of accuracy. Conversely, until John Harrison perfected the marine chronometer (H-4) in 1760, an undertaking that literally consumed forty years of his life, that same ship’s captain was unable to precisely calculate his longitude once at sea. The best option for attempting to determine the distance east or west of the prime meridian was to employ the dead reckoning method. This required the captain to throw a log overboard and observe how quickly the ship receded from this temporary guidepost. He would then note the crude speedometer reading in his logbook along with the direction of travel, which he took from the stars or a compass, and the length of time on a particular course, counted with a sandglass or a pocket watch, neither of which was known for its accuracy. Factoring in ocean currents, unpredictable winds, and general errors in judgement, he would then estimate his current longitude.
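For readers who like to see the arithmetic, the dead reckoning estimate described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `dead_reckon` and its parameters are our own, the sketch uses the simple "plane sailing" approximation (one nautical mile equals one minute of latitude), and it ignores the currents and winds that made the real exercise so error-prone.

```python
import math

def dead_reckon(lat_deg, lon_deg, heading_deg, speed_knots, hours):
    """Estimate a new position from a known start, a heading, a speed and elapsed time.

    Hypothetical helper for illustration only. Uses the plane-sailing
    approximation, which is reasonable for short legs away from the poles.
    Latitude is positive north, longitude is positive east.
    """
    distance_nm = speed_knots * hours              # distance run, in nautical miles
    heading = math.radians(heading_deg)            # 0 = north, 90 = east
    d_north = distance_nm * math.cos(heading)      # northward component of the run
    d_east = distance_nm * math.sin(heading)       # eastward component of the run

    new_lat = lat_deg + d_north / 60.0             # 60 nautical miles per degree of latitude
    mid_lat = math.radians((lat_deg + new_lat) / 2.0)
    # A degree of longitude shrinks with the cosine of the latitude.
    new_lon = lon_deg + d_east / (60.0 * math.cos(mid_lat))
    return new_lat, new_lon

# Ten hours due west at 6 knots, starting on the equator at the prime meridian.
print(dead_reckon(0.0, 0.0, 270.0, 6.0, 10.0))
```

Every input here (speed from the log line, heading from the compass, time from the sandglass) carried its own error, and those errors compounded with each leg of the voyage.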

For over three hundred years, from the time Columbus landed in the West Indies in 1492, until 1828 when the British Board of Longitude was disbanded and the Longitude Act repealed, it is estimated that “thousands upon thousands” of ships were lost at sea. One would expect that storms, pirates, naval battles, and uncharted waters were the primary reasons for the majority of these maritime disasters, but that was not the case. The vast majority of shipwrecks occurred due to navigational errors in well-travelled and well-charted sea lanes. The number one error was the inability to precisely calculate a ship’s longitude while at sea. The result was that many ships arrived at their destination in the dark, or in a fog where the lack of visibility precluded the ship’s crew from sighting land before their ship hit the rocks. Most of these disasters resulted in a total loss of the ship, its cargo, and its entire crew.

Similarly, vast sums of capital have been lost by equity investors at the end of each bull market journey due to a different type of navigational error, not because they lack precise instrumentation, but because they are too arrogant to trust their instruments. They actually prefer the dead reckoning method. They prefer to roll the dice. Despite having accurate measures of valuation, and over 125 years of historical price data to study; despite having numerous periodic measures of momentum and breadth that accurately portray the internal health of the markets; and despite being able to model sector rotation shifts and the relative strength trends and momentum of individual issues with relative precision, investors instead cling to narratives, behavioral biases, and the low state of needing to be right all the time. Historically, this has been their undoing, and it will likely continue to be their undoing in the future. As the famed Wall Street plunger Jesse Livermore once wrote:

“There is nothing new in Wall Street. There can’t be because speculation is as old as the hills. Whatever happens in the stock market today has happened before and will happen again.”

Of course, early maritime explorers had a good excuse. They literally did not have the technology necessary to succeed. But, against all odds, they did so anyway on at least as many occasions as they failed. By 1760, Harrison’s H-4 would allow a ship’s captain to accurately calculate his longitude at sea based upon the amount of time elapsed during a voyage from any given home port. One degree of longitude equated to 4 minutes of time. Each hour equated to 15 degrees of longitude. Using a sextant, a ship’s captain or navigator would measure the angle between the sea’s horizon and a celestial body. Then, using a precision marine chronometer such as the H-4, he would note the precise time, which he translated, with the help of the ship’s astronomical tables, into the exact longitudinal position east or west of Greenwich, the “Prime Meridian,” as established by the British crown.
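The conversion between time and longitude is simple enough to sketch in Python. The sketch below is illustrative only and rests on a simplifying assumption: the navigator observes local noon (the sun at its highest point) and reads the chronometer, which still keeps the time of the reference meridian. The function name `longitude_from_chronometer` is our own.

```python
DEGREES_PER_HOUR = 360.0 / 24.0   # the Earth turns 15 degrees per hour

def longitude_from_chronometer(reference_time_at_local_noon):
    """Return longitude in degrees (east positive, west negative).

    reference_time_at_local_noon is the chronometer reading, in decimal
    hours, at the moment the sun is highest at the ship's position.
    Hypothetical helper; it ignores refinements such as the equation of time.
    """
    hours_ahead_of_reference = 12.0 - reference_time_at_local_noon
    return hours_ahead_of_reference * DEGREES_PER_HOUR

# The chronometer reads 14:00 at local noon: the ship is two hours
# behind the reference meridian, i.e. 30 degrees west of it.
print(longitude_from_chronometer(14.0))
```

The same arithmetic shows why accuracy mattered so much: a clock that drifts by just four minutes over a voyage puts the ship a full degree of longitude away from where the navigator believes it to be.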

The Longitude Act was established in 1714, in response to one of the greatest maritime disasters in British naval history. On the foggy night of October 22, 1707, a task force of five ships commanded by Admiral Sir Clowdisley Shovell was in the final leg of its return voyage home, victorious from a battle in the French Mediterranean. Four of the five ships hit the rocks of the Scilly Isles just 20 nautical miles southwest of Cornwall, England. To their horror, Shovell and his captains had misgauged their longitude. The Scilly Isles became the unmarked tombstones for two thousand British troops. The flagship Association struck first and sank within minutes, drowning all hands. Before the rest of the ships could take evasive action, two more ships, the Eagle and the Romney, ripped across the rocks and went down like stones. In all, four of the five ships were lost.

The Longitude Act declared that a purse of £20,000 would be awarded to anyone who could solve the “longitude problem.” Harrison, a carpenter turned clockmaker, viewed it as a mechanical problem and sought to solve it with a superior device. British Royal Astronomer Nevil Maskelyne viewed it as a mathematical problem, and advocated for a different approach than Harrison’s expensive H-4 chronometer (at an average cost of £500 per replica). While precise time was the solution, the H-4 was not practical given that it took Harrison nearly 40 years of toiling and more than £500 to produce his masterpiece after three prior attempts fell short of the goal. Moreover, it would take the best watchmakers in England a minimum of four months to produce a single replica of the H-4 at a minimum cost of £200 (about $50,000 today). Rather than outfit every ship in the British merchant and naval fleets with this very expensive, yet exceptional, waterproof, temperature-adaptive, corrosion-resistant, precise timekeeping device, Maskelyne proposed to calculate the ship’s longitude by triangulating its position using multiple celestial bodies. He produced an extensive book of all known celestial bodies and events titled simply, The Nautical Almanac. He then proposed to teach each ship’s captain and navigator the intricate mathematics of trigonometry, so that they could calculate their ship’s longitude using The Nautical Almanac and without the need for a mechanical timekeeping device.

But there were many problems with Maskelyne’s method that made it impractical. First, the sky was not always clear. Second, many celestial bodies were only visible during certain months of the year, and from specific vantage points. Third, there were literally many hundreds, if not thousands of ships, and a like number of sea captains to be trained. How would that be done? And what if they were unable to learn the higher university-level mathematics (mastered at that time by only a select few of the most brilliant mathematicians in history)? And finally, it took Maskelyne himself upwards of four hours to take the required astronomical measurements and complete the complicated mathematical calculations necessary to arrive at a longitudinal fix, and oftentimes he found errors in his own calculations. How would this be possible when it was sometimes necessary to get a position fix up to six times in a single day? When would the navigator sleep?

Correspondingly, there are many problems with the brute force method of Gen-AI development: limited competition, for one; access to cutting-edge processing technology, for another; and, third, access to sufficient cost-effective electrical power. These problems can all be overcome with an investment in infrastructure over time by the select few whose balance sheets can support it. Eventually, as was the case in Harrison’s day, even limited competition will lead to innovation, ultimately driving costs lower. Falling costs, of course, made it possible for all of the world’s navies and merchant fleets to become sufficiently outfitted with state-of-the-art marine chronometers by as early as 1831, when the HMS Beagle set sail carrying British naturalist Charles Darwin on the voyage that would take him to the Galapagos Islands.

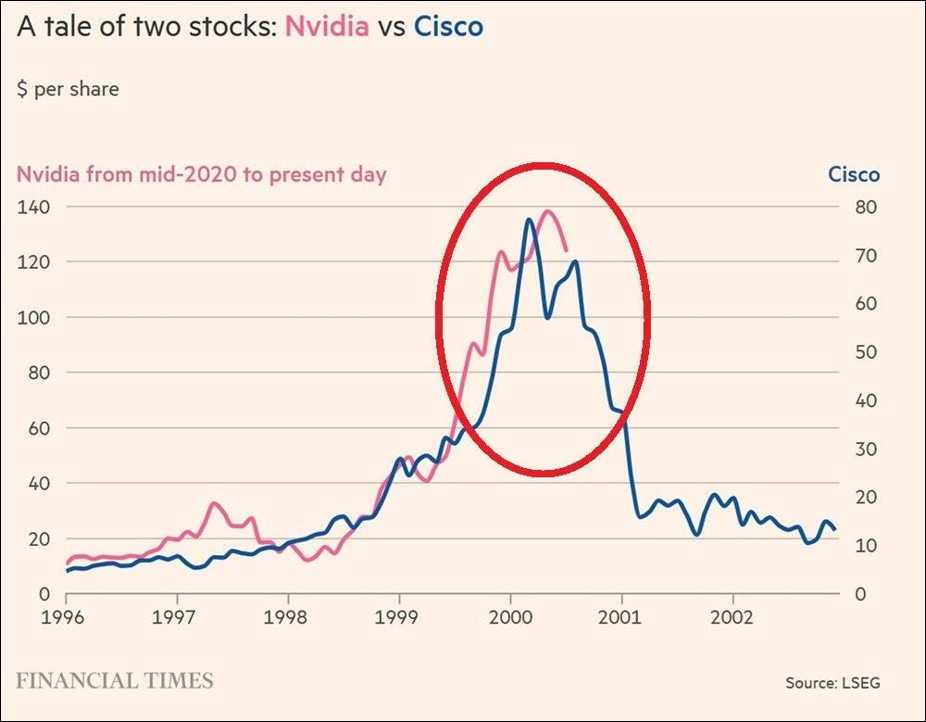

Indeed, the open source nature of the DeepSeek platform has already allowed other AI developers to replicate the performance of R1 — and in the case of UC Berkeley, improve upon it, thus lowering the cost of training to a mere $450. Whether or not DeepSeek perseveres as a leading competitor is now irrelevant. The approach of using software as the primary tool to develop Gen-AI platforms is here to stay. As such, the implications for Gen-AI development in the U.S. are enormous. Not only does this “Sputnik moment” open the door to broader competition, but it calls into question the future demand picture for high-powered GPUs — such as those produced by Nvidia. As discussed in Issue #40, NVDA shares have made no net progress since the Semiconductor index (SOX) topped last July. To be sure, expectations had likely reached unattainable levels by November, when a story detailing “The Nvidia Way” made the cover of Barron’s magazine. Yet, the relative performance of the SOX peaked in June of last year, and it has since underperformed the S&P 500 by some twenty percentage points. In fact, since at least September, fewer than half the issues that make up the SOX have been trading above their 200-DMA.

In our opinion, a cheaper, more practical solution to the problem of Gen-AI has presented itself. The smart money has known of this potential for the better part of the last six to eight months. For a brief moment in time, NVDA held the top spot as the most valuable company in the world. But success is fleeting. We suspect that NVDA’s days in the limelight are now behind it, and that last week’s decline may have further cemented the potential for a new bear market in mega-cap technology shares to take a firm hold and eventually pull the rest of the market down with it.

Substack Community Spotlight

The Substack platform has grown into a hub for highly credible financial analysts and investment strategists to publish their insights and perspectives. High quality research and analysis, previously reserved only for institutions, is now available for public consumption. We thought that it might be helpful to our readers if we highlighted a few of the publications that we find to be of interest. Below are three newsletters from other independent thinkers that you might find interesting too:

Asbury Research Chart Focus: Emphasizing the key charts that illustrate the health and trajectory of the market.

The Weekly S&P 500 #ChartStorm: Carefully hand picked charts with clear commentary on why they matter and how they fit together.

The Market Mosaic: Identifying trend-ready stocks.

Macro Perspectives: Such Great Heights

The return on investment (ROI) for Gen-AI investments is non-existent, and in most cases today, it’s negative. This is considered tolerable for growth investors when the spending is pre-revenue. But the massive capex budgets dedicated to Gen-AI infrastructure development plans in 2025 are expected to reach $200 billion or more. Historically speaking, when China enters a market, margins almost immediately go to zero. DeepSeek purportedly spent only $50 million on 2,048 older Nvidia model H800 GPUs to develop their large language model (LLM). This compares to tens of billions of dollars already spent on data center infrastructure by the U.S. hyperscalers.

Both DeepSeek and OpenAI disclose pricing for their LLM computations on their respective websites. DeepSeek says their “V3” (the LLM that supports R1) costs 55 cents per 1 million tokens of input and $2.19 per 1 million tokens of output (“tokens” refers to each individual unit of text processed by the model). By comparison, OpenAI’s pricing page for their “o1” (the LLM that supports GPT4) shows the firm charges $15 per 1 million input tokens and $60 per 1 million output tokens. That’s roughly 27x more expensive than the new Chinese alternative!
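The 27x figure is easy to verify from the quoted prices. The short Python sketch below simply divides one price list by the other; the dictionary layout and the function name `price_ratio` are our own, and the prices are the ones cited above rather than live quotes.

```python
# Prices in U.S. dollars per 1 million tokens, as quoted in the text above.
PRICES = {
    "deepseek": {"input": 0.55, "output": 2.19},
    "openai_o1": {"input": 15.00, "output": 60.00},
}

def price_ratio(kind):
    """How many times more OpenAI charges than DeepSeek for 'input' or 'output' tokens."""
    return PRICES["openai_o1"][kind] / PRICES["deepseek"][kind]

for kind in ("input", "output"):
    print(f"{kind}: {price_ratio(kind):.1f}x")
```

Both ratios land a little above 27, so the multiple holds whether a workload is dominated by prompts or by generated text.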

What will growth investors do once they find out that the ROI for Gen-AI will remain at zero, or worse? To what extent will data center investments dilute the current high profit margins of the Mag-7? What multiple will growth investors be willing to pay for REIT-like returns? We suspect that it will be a lot less than they are paying today.

Let’s take a closer look at the cost-benefit analysis of the current Gen-AI development protocol. To begin, it is noteworthy that even Nvidia agrees that DeepSeek’s R1 is “an excellent AI advancement and a perfect example of Test Time Scaling,” as per an Nvidia spokesperson. But in a clear attempt to put a positive spin on the news of a major competitive threat to its high-performance GPU monopoly, the Nvidia spokesperson continued: